Face Analysis using ML-Kit and TensorFlow Lite

I have been looking for a way to build a face recognition project on Android. My goal is to run facial expression, age, gender, and face recognition offline on Android (target version: 7.1).

Classification/Object Detection TensorFlow Lite Example

I looked around and found a usable example in the TensorFlow GitHub repository. The TensorFlow Lite example at https://github.com/tensorflow/examples/tree/master/lite/examples/image_classification/android provides a working image classification app. You can build and run the examples (image classification, object detection) straight away in Android Studio.

The original example is based on two TensorFlow Lite models, EfficientNet and MobileNet, each available in a float and a quantized version. To adapt it to our case, we first converted our model to TensorFlow Lite following the instructions in the TensorFlow blog post on Medium (https://medium.com/tensorflow/training-and-serving-a-realtime-mobile-object-detector-in-30-minutes-with-cloud-tpus-b78971cf1193). Thanks to this, my student built a TFLite model for me to test.
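The float and quantized versions differ in how much memory one input image takes, which matters when you swap in your own model. Here is a minimal sketch of that arithmetic, assuming an RGB input, float32 values for the float model, and uint8 values for the quantized model. The class and method names are made up for illustration and do not come from the example app.

```java
/** Sketch: input buffer size for float vs. quantized image models. */
final class InputBufferSize {

    /** Bytes per channel value: 4 for float32 input, 1 for uint8 (quantized) input. */
    static int bytesPerChannel(boolean quantized) {
        return quantized ? 1 : 4;
    }

    /** Total bytes needed for one RGB image of the given size. */
    static int inputBytes(int width, int height, boolean quantized) {
        final int channels = 3; // assumption: RGB input
        return width * height * channels * bytesPerChannel(quantized);
    }
}
```

For a 224x224 input, InputBufferSize.inputBytes(224, 224, false) gives 602112 bytes, while the quantized variant needs 150528 bytes. If the buffer you feed to the interpreter does not match what the model expects, the model will most likely fail to run, so it is worth checking which variant your converted model is.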

The steps to add your own model for classification are simple:

1. Add the dropdown option to the Android application in the file https://github.com/tensorflow/examples/blob/master/lite/examples/image_classification/android/app/src/main/res/values/strings.xml by adding an item to the string array.

<string-array><item>GenderNet</item>.. </string-array>

2. Add the new classifier, e.g. “GenderClassification.java”, which imitates one of the original classifiers in the example (derived from Classifier.java). Don’t forget to set the IMAGE_MEAN and IMAGE_STD values according to your preprocessing. They are used in the getPreprocessNormalizeOp() override.

These files go in the …./app/src/main/java/org/tensorflow/lite/examples/classification/tflite folder.

3. Modify Classifier.java (https://github.com/tensorflow/examples/blob/master/lite/examples/image_classification/android/app/src/main/java/org/tensorflow/lite/examples/classification/tflite/Classifier.java) to add a case for your own model.

… else if (model == Model.GenderNET) { return new GenderClassifier(activity, device, numThreads); }

Adjust the preprocessing in the loadImage(..) function of this file to match your model. The existing pipeline applies a crop-or-pad resize, a resize to the model input size, a rotation if needed, and normalization.

ImageProcessor imageProcessor =

new ImageProcessor.Builder()

.add(new ResizeWithCropOrPadOp(cropSize, cropSize))

.add(new ResizeOp(imageSizeX, imageSizeY, ResizeMethod.NEAREST_NEIGHBOR))

.add(new Rot90Op(numRotation))

.add(getPreprocessNormalizeOp())

.build();

return imageProcessor.process(inputImageBuffer);

4. Copy the model file and the label file to the assets folder. We set the model file and label file names in “GenderClassification.java” and put both files in the assets folder: …/app/src/main/assets/
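To make step 2 more concrete, here is a minimal sketch of what normalizing with IMAGE_MEAN and IMAGE_STD does to each pixel value, assuming the operation computes (value - mean) / std, as the NormalizeOp of the TensorFlow Lite support library does. The class and method names are made up for illustration and are not part of the example app.

```java
/** Sketch: per-value normalization with a mean and a standard deviation. */
final class NormalizeSketch {

    /** Maps one channel value in [0, 255] to (value - mean) / std. */
    static float normalize(int value, float mean, float std) {
        return (value - mean) / std;
    }

    /** Normalizes every value of a flattened pixel array. */
    static float[] normalizeAll(int[] values, float mean, float std) {
        float[] out = new float[values.length];
        for (int i = 0; i < values.length; i++) {
            out[i] = normalize(values[i], mean, std);
        }
        return out;
    }
}
```

With mean 127.5 and std 127.5, the values 0 and 255 map to -1 and 1. With mean 0 and std 255, they map to 0 and 1. Which pair is right depends on how the model was trained, so the values should match the preprocessing used in the training script.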

That is all, unless I am forgetting something. You can now build and run the example with your own model. The example starts the camera and performs image classification with the model selected in the bottom spinner. One note concerns displaying the results in the bottom sheet. The LinearLayout in GestureLayout has three lines to display the top three choices. If you have only two choices, e.g. (Male, Female), you have to modify the display code in https://github.com/tensorflow/examples/blob/master/lite/examples/image_classification/android/app/src/main/java/org/tensorflow/lite/examples/classification/CameraActivity.java

There, the results are only displayed when the result list has at least 3 entries. Change this to 2, for example, and modify or delete the last “if”.
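The idea behind this change can be sketched in plain Java: always fill the three display rows, and leave a row blank when the model returns fewer results. This is only an illustration, not the code of CameraActivity.java. ResultRows and its method are hypothetical names, and the results are simplified to two parallel lists of titles and confidences.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Sketch: format up to three results, leaving missing rows blank. */
final class ResultRows {

    static final int ROWS = 3; // the bottom sheet has three lines

    static List<String> format(List<String> titles, List<Float> confidences) {
        List<String> rows = new ArrayList<>();
        for (int i = 0; i < ROWS; i++) {
            boolean present = titles != null && confidences != null
                    && i < titles.size() && i < confidences.size();
            if (present) {
                // Same "%.2f" percentage style as the example app uses.
                rows.add(String.format(Locale.US, "%s %.2f%%",
                        titles.get(i), 100 * confidences.get(i)));
            } else {
                rows.add(""); // nothing to show on this line
            }
        }
        return rows;
    }
}
```

With two results such as Male (0.75) and Female (0.25), the first two rows are filled and the third stays empty, so no index outside the list is ever read.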

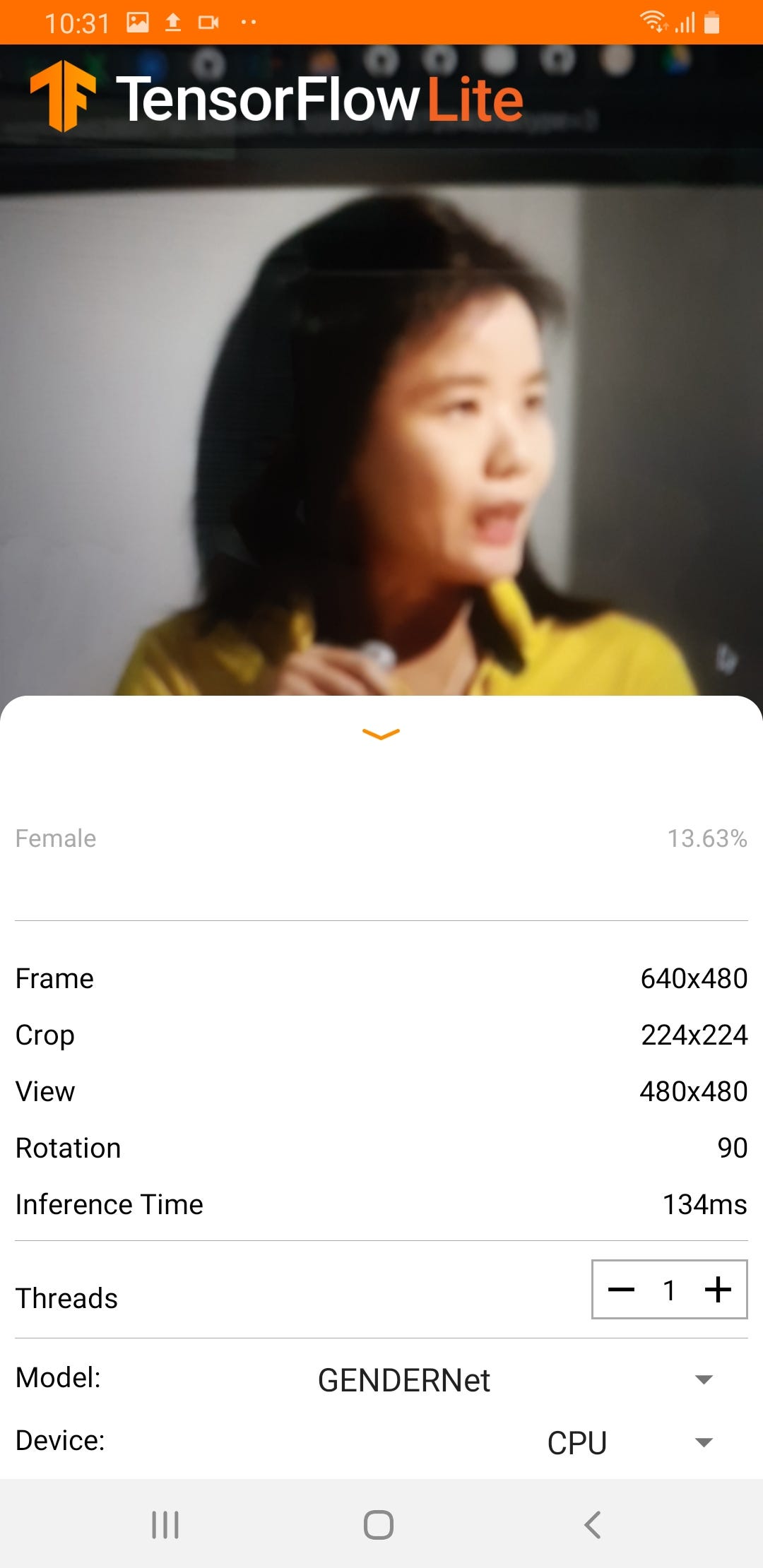

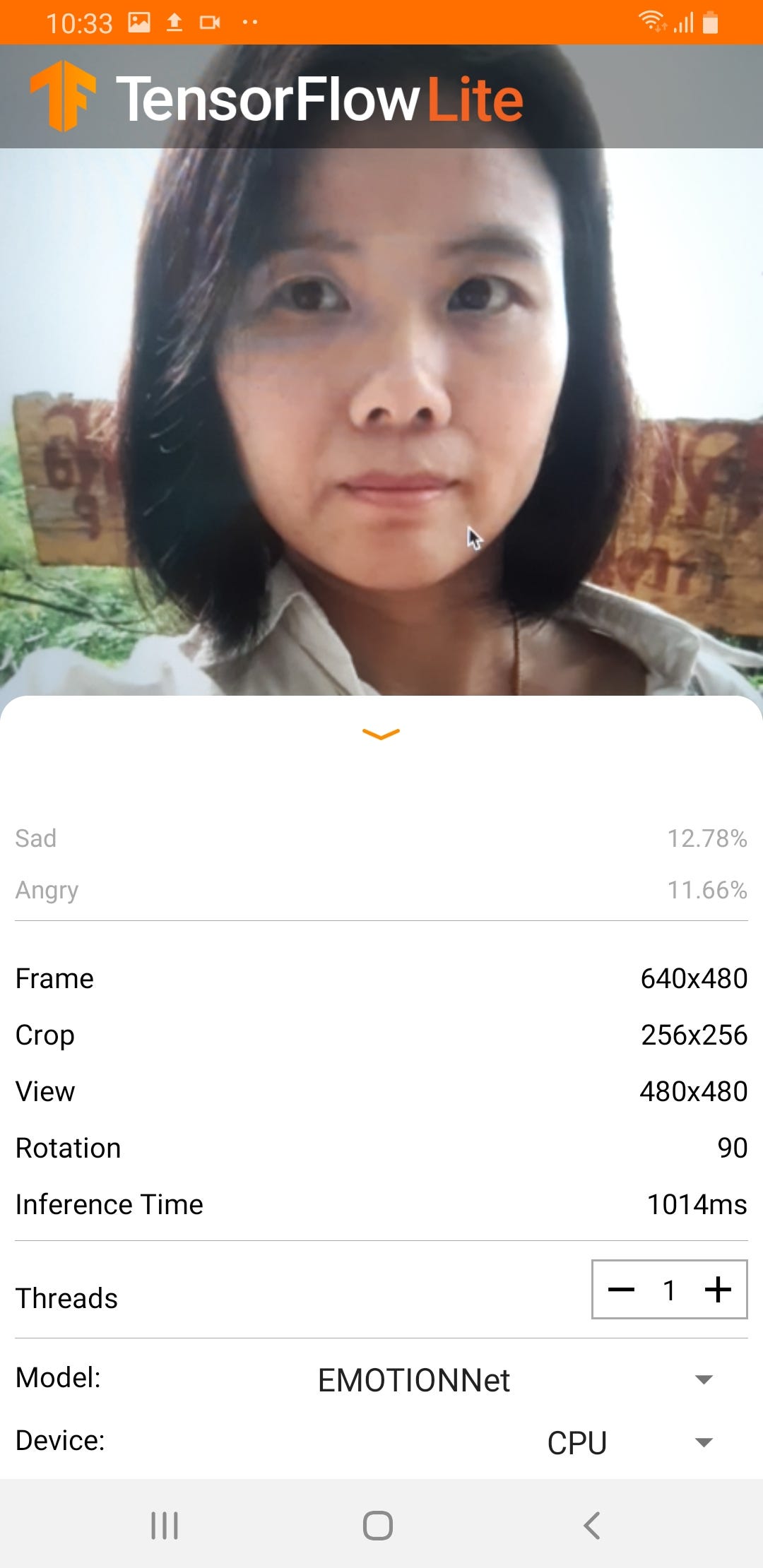

The image classification does not perform very well, since the camera captures the whole frame and sends it to the classifier. The accuracy is not as good as what the model reached in training. The following example shows our network used for age, gender, and emotion recognition.

So I looked for a face cropping technique or library for Android. The question is whether we can use OpenCV or dlib, just like in Python.

Face Detection using ML-kit

Since we trained on cropped faces only, we have to find a way to detect the face bounding box. Several blogs describe a solution (https://medium.com/@luca_anzalone/setting-up-dlib-and-opencv-for-android-3efdbfcf9e7f). But I found it quite painful to recompile OpenCV and dlib for Android. Also, there are many OpenCV versions and we don’t know which ones work, and the git repository for dlib is quite old.

Several recent solutions use Firebase (https://firebase.google.com/docs/ml-kit/android/detect-faces). The solution is interesting, but Firebase needs registration (https://www.nexmo.com/blog/2018/09/25/a-strawberry-or-not-classify-an-image-with-mlkit-for-android-dr). I then came across ML-kit, which is the more recent solution. I think it is the evolution of the Firebase version, since the usage is very similar. There is an example on GitHub (https://github.com/googlesamples/mlkit) with an Android application that we can build, and it detects landmarks and bounding boxes like we had with dlib. So, I guess that is the path for us. I tried to code with it and to understand how it works on the camera image.

I found example code that uses ML-kit to crop faces into a Bitmap and perform classification. The example code in the official repository contains two example sources: live camera and still image (https://github.com/googlesamples/mlkit/tree/master/android/vision-quickstart).

Thus, I started from the mlkit GitHub project, since it includes the library that detects the face bounding box and the face landmarks for us at an acceptable frame rate. The following figures show the original examples run from the above repository. It includes object detection, image classification, and face detector examples. The left side is the face detector example, while the right side is the custom image classification.

Next, we tried to use our converted TensorFlow Lite model in this image classification example. Unfortunately, the interpreter could not read our model, and we could not find a way to fix it. This example may use a different TensorFlow Lite version.

Mixing ML-kit and TensorFlow Lite 0.0.0 for face analysis

To summarize the problems above:

1. Accuracy suffers because the images are not cropped.

2. The TensorFlow Lite version in ML-kit is incompatible with our models.

3. OpenCV or dlib needs work to compile for Android.

I chose to start from the ML-kit example and use its face detector. This gives me the cropped face, which I send to the TensorFlow Lite interpreter version 0.0.0, which is compatible with our models. I don’t use the version that comes with the example.

The ML-kit example has both normal camera and CameraX activities. I chose the normal camera activity. Here is a summary of the modifications.

1. Change the main activity to LivePreviewActivity (the normal camera activity, not the CameraX one) in AndroidManifest.xml.

<activity

android:name=".LivePreviewActivity"

android:exported="true"

android:theme="@style/AppTheme">

<intent-filter>

<action android:name="android.intent.action.MAIN"/>

<category android:name="android.intent.category.LAUNCHER"/>

</intent-filter>

</activity>

2. Change LivePreviewActivity.java to add the model selection options in onCreate(..).

options.add(….)

Move the face detector setup into this function too, since when the application opens, the camera starts and the face detector scans for the face bounding box, etc.

if (allPermissionsGranted()) {

if (cameraSource == null) {

cameraSource = new CameraSource(this, graphicOverlay);

setClassifier();

FaceDetectorOptions faceDetectorOptions =

PreferenceUtils.getFaceDetectorOptionsForLivePreview(this);

cameraSource.setMachineLearningFrameProcessor(

new FaceDetectorProcessor(this,faceDetectorOptions, mode.getMode(), classifier));

}

// createCameraSource(selectedModel);

} else {

getRuntimePermissions();

}

I also added the mode variable to remember which model is selected. I set the mode variable when an item is selected.

public synchronized void onItemSelected(AdapterView<?> parent, View view, int pos, long id) {

.... setClassifier();

}

private void setClassifier () {

if (selectedModel == FACE_GENDER) {

mode.setMode(Constant.GENDER_OPTION);

try {

classifier = new GenderClassifier(this);

classifier.create(this,Constant.GENDER_OPTION);

} catch ( IOException e) {

LOGGER.e("Loading gender classifier");

}

}

else if (selectedModel == FACE_AGE) {

mode.setMode(Constant.AGE_OPTION);

try {

classifier = new AgeClassifier(this);

classifier.create(this,Constant.AGE_OPTION);

} catch ( IOException e) {

LOGGER.e("Loading Age classifier");

}

}

else if (selectedModel == FACE_EXPRESSION) {

mode.setMode(Constant.EXP_OPTION);

try {

classifier = new EmotionClassifier(this);

classifier.create(this,Constant.EXP_OPTION);

} catch ( IOException e) {

LOGGER.e("Loading Emotion classifier");

}

}

else if (selectedModel == FACE_RECOGNITION) {

mode.setMode(Constant.FACE_OPTION);

Classifier.create(this,Constant.FACE_OPTION);

}

}
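As a side note, the if/else chain in setClassifier() could also be written as a lookup table from the selected option to a factory. The following is only a sketch of that idea in plain Java, not the code used in this project: ClassifierRegistry, FaceClassifier, and Factory are hypothetical names, and the real classifiers would take an Activity and load a model file.

```java
import java.io.IOException;
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: pick a classifier by option name instead of an if/else chain. */
final class ClassifierRegistry {

    /** Hypothetical stand-in for the Classifier base class. */
    interface FaceClassifier {
        String name();
    }

    /** Creating a classifier may fail while loading the model, hence IOException. */
    interface Factory {
        FaceClassifier create() throws IOException;
    }

    private final Map<String, Factory> factories = new LinkedHashMap<>();

    void register(String option, Factory factory) {
        factories.put(option, factory);
    }

    FaceClassifier create(String option) throws IOException {
        Factory factory = factories.get(option);
        if (factory == null) {
            throw new IllegalArgumentException("Unknown model option: " + option);
        }
        return factory.create();
    }
}
```

Each model is then registered once, for example with registry.register("gender", ...), and the selection handler only calls registry.create(selectedOption) inside a single try/catch. Whether this is worth it for four models is a matter of taste.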

Finally, in this file, I modified the showResultsInBottomSheet function. By default, three predictions are displayed. Since our gender model yields only two predictions, results.size() can be 2. Thus, I changed the condition.

public void showResultsInBottomSheet(List<Classifier.Recognition> results) {

// limit only three results at most

if (results != null && results.size() >= 2) { ....

and simply guard the last recognition in case it does not exist.

if (results.size() > 2) {

Classifier.Recognition recognition2 = results.get(2);

if (recognition2 != null) {

if (recognition2.getTitle() != null)

recognition2TextView.setText(recognition2.getTitle());

if (recognition2.getConfidence() != null)

recognition2ValueTextView.setText(

String.format("%.2f", (100 * recognition2.getConfidence())) + "%");

}

}

else {

recognition2TextView.setText(" ");

recognition2ValueTextView.setText(" ");

}

3. Change FaceDetectorProcessor.java. This step is important, since the cropped face is obtained here and sent to the classifier for prediction.

Modify the constructor of FaceDetectorProcessor to pass along the classifier and the current activity context, so that it can invoke the generic classifier.

public FaceDetectorProcessor(Context context, FaceDetectorOptions options, int mode, Classifier classifier ) {

super(context);

Log.v(MANUAL_TESTING_LOG, "Face detector options: " + options);

detector = FaceDetection.getClient(options);

this.mode = mode;

this.context = context;

this.classifier = classifier;

}

Next, change the onSuccess() function to add a Bitmap parameter, which holds the frame that the face detector is running on.

protected void onSuccess(@NonNull List<Face> faces, @NonNull Bitmap bitmap, @NonNull GraphicOverlay graphicOverlay) throws MalformedURLException {

for (Face face : faces) {

i++;

graphicOverlay.add(new FaceGraphic(graphicOverlay, face, i));

final long startTime = SystemClock.uptimeMillis();

Bitmap croppedImage = BitmapUtils.cropBitmap(bitmap, face.getBoundingBox());

final List<Classifier.Recognition> results =

classifier.recognizeImage(croppedImage);

long lastProcessingTimeMs = SystemClock.uptimeMillis() - startTime;

LOGGER.v("Detect: %s", results);

System.out.println(i + " xxx" + results);

logExtrasForTesting(face);

LivePreviewActivity imageView = (LivePreviewActivity) context;

imageView.showObjectID(i); //send back results to display

imageView.showResultsInBottomSheet(results);

}

}

In the above code, the bitmap is cropped and sent to the classifier in these lines.

Bitmap croppedImage = BitmapUtils.cropBitmap(bitmap, face.getBoundingBox());

final List<Classifier.Recognition> results =

classifier.recognizeImage(croppedImage);

Then, the results are sent to LivePreviewActivity for display in the last two lines. Note that the activity context is passed to this detector too, so that it can make these calls.

imageView.showObjectID(i); //send back results to display

imageView.showResultsInBottomSheet(results);

The function cropBitmap(..) is added to BitmapUtils.java.

public static Bitmap cropBitmap(Bitmap bitmap, Rect rect) {

int w = rect.right - rect.left;

int h = rect.bottom - rect.top;

Bitmap ret = Bitmap.createBitmap(w, h, bitmap.getConfig());

Canvas canvas = new Canvas(ret);

canvas.drawBitmap(bitmap, -rect.left, -rect.top, null);

return ret;

}

4. VisionProcessorBase.java

FaceDetectorProcessor is derived from the VisionProcessorBase class, which is where the frame image is captured.

The onSuccess() declaration in the superclass is also modified to add the bitmap frame parameter.

protected abstract void onSuccess(@NonNull T results, @NonNull Bitmap image, @NonNull GraphicOverlay graphicOverlay) throws MalformedURLException;

Modify the call to onSuccess in requestDetectInImage() to add this bitmap parameter. This call to onSuccess is in the success listener.

private Task<T> requestDetectInImage(....)

{ ....

return detectInImage(image)

.addOnSuccessListener(....

executor,

results -> {.... if (originalCameraImage != null) {

graphicOverlay.add(new CameraImageGraphic(graphicOverlay, originalCameraImage));

}

try {

VisionProcessorBase.this.onSuccess(results, originalCameraImage, graphicOverlay);

} catch (MalformedURLException e) {

e.printStackTrace();

} }}

The line

VisionProcessorBase.this.onSuccess(results, originalCameraImage, graphicOverlay);

sends the bitmap frame to onSuccess() in FaceDetectorProcessor.
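Now that the full frame reaches onSuccess(), the face bounding box is what turns it into a classifier input. A detector's bounding box may extend past the frame edges when a face is only partly visible. The Canvas-based cropBitmap(..) in step 3 tolerates this, but if you crop with Bitmap.createBitmap(source, x, y, width, height) instead, the region must lie inside the source bitmap. Here is a minimal sketch of clamping a box to the frame, in plain Java with int coordinates instead of android.graphics.Rect. BoxClamp is a hypothetical helper, not part of the ML-kit example.

```java
/** Sketch: clamp a bounding box to the image bounds before cropping. */
final class BoxClamp {

    /**
     * Returns {left, top, right, bottom} limited to a width x height image,
     * or null when the box does not overlap the image at all.
     */
    static int[] clamp(int left, int top, int right, int bottom, int width, int height) {
        int l = Math.max(0, left);
        int t = Math.max(0, top);
        int r = Math.min(width, right);
        int b = Math.min(height, bottom);
        if (r <= l || b <= t) {
            return null; // empty intersection, nothing to crop
        }
        return new int[] {l, t, r, b};
    }
}
```

For a 640x480 frame, a box of (-10, 20, 100, 500) becomes (0, 20, 100, 480). A null result means the face should simply be skipped for that frame.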

5. Create a classifier for each mode and set the model and label paths properly. These classes are adapted from the TensorFlow Lite example (Classifier.java, ClassifierEfficientNet.java, …). Add the dependencies in build.gradle:

implementation('org.tensorflow:tensorflow-lite:0.0.0-nightly') { changing = true }

implementation('org.tensorflow:tensorflow-lite-gpu:0.0.0-nightly') { changing = true }

implementation('org.tensorflow:tensorflow-lite-support:0.0.0-nightly') { changing = true }

Here, there are three classifiers, AgeClassifier, GenderClassifier, and EmotionClassifier, placed under the tflite folder.

The paths are set as in the following example. The tflite and label files are under the resource folder (res).

protected String getModelPath() {

// you can download this file from

// see build.gradle for where to obtain this file. It should be auto

// downloaded into assets.

return "age224.tflite";

}

@Override

protected String getLabelPath() {

return "age_label.txt";

}

This is all, I think. The following are example results. The frame rate is pretty good for gender and age classification but slow for the expression classifier. The expression classifier is based on ResNet with a 256x256 input, which is quite large. We tried a black-and-white model (48x48 input), but it seems that the TensorFlow Lite code we used with ML-kit checks that the number of channels is 3. We could not find a way around this; thus, we use the color model. The accuracy of the gender classification is good, while the age classification is not so good. You are welcome to try your own models. Cheers!!

The code of all this is available at https://github.com/cchantra/FaceAndroid

Any comments and suggestions are welcome. Please accept my apologies if there are any mistakes.